Trust by design: Understanding the Einstein Trust Layer in Salesforce

The Einstein Trust Layer is Salesforce’s answer to one of the biggest questions in enterprise AI: how do you use generative AI without losing control of your data?

As Salesforce adds AI features across sales, service, marketing, and analytics, you need strong guarantees around data privacy, security, and compliance. Sending sensitive customer data directly to large language models is not acceptable for most organisations… and that includes yours probably too.

That is why Salesforce introduced the Einstein Trust Layer.

What is the Einstein Trust Layer in Salesforce

The Einstein Trust Layer is Salesforce’s built-in framework for using artificial intelligence safely and responsibly.

It was designed to make sure generative AI can work with your data without putting trust, security, or privacy at risk. As AI becomes part of everyday Salesforce workflows, this trust becomes even more critical.

The Einstein Trust Layer acts as a secure barrier between your Salesforce data and AI models. It ensures that sensitive customer data stays protected while still allowing AI features to deliver real value.

If you use Salesforce and want to use AI, the Einstein Trust Layer is what makes that possible at an enterprise level. It keeps data governance, compliance, and control in place.

The power of the Einstein Trust Layer

The Einstein Trust Layer aims to solve a core problem with generative AI in most businesses: most AI models are not designed to handle your sensitive enterprise data safely.

Traditional AI integrations often send raw business data directly to external LLMs. This creates serious risks, especially when that data includes customer details, contracts, or internal information stored in Salesforce.

Not cool.

The Einstein Trust Layer was designed to remove these risks while still allowing AI to deliver value. This is where the power of the Einstein Trust Layer features truly come into play:

- It prevents sensitive Salesforce data from being exposed to AI providers

- It ensures customer data is not used to train public AI models

- It gives organisations control over how AI is used inside Salesforce

- It supports enterprise compliance requirements such as GDPR

Salesforce has always positioned trust as a core value. As AI became more powerful and more embedded in the platform, Salesforce stayed close to that core value by extending that trust model into AI through the Einstein Trust Layer.

How the Einstein Trust Layer works

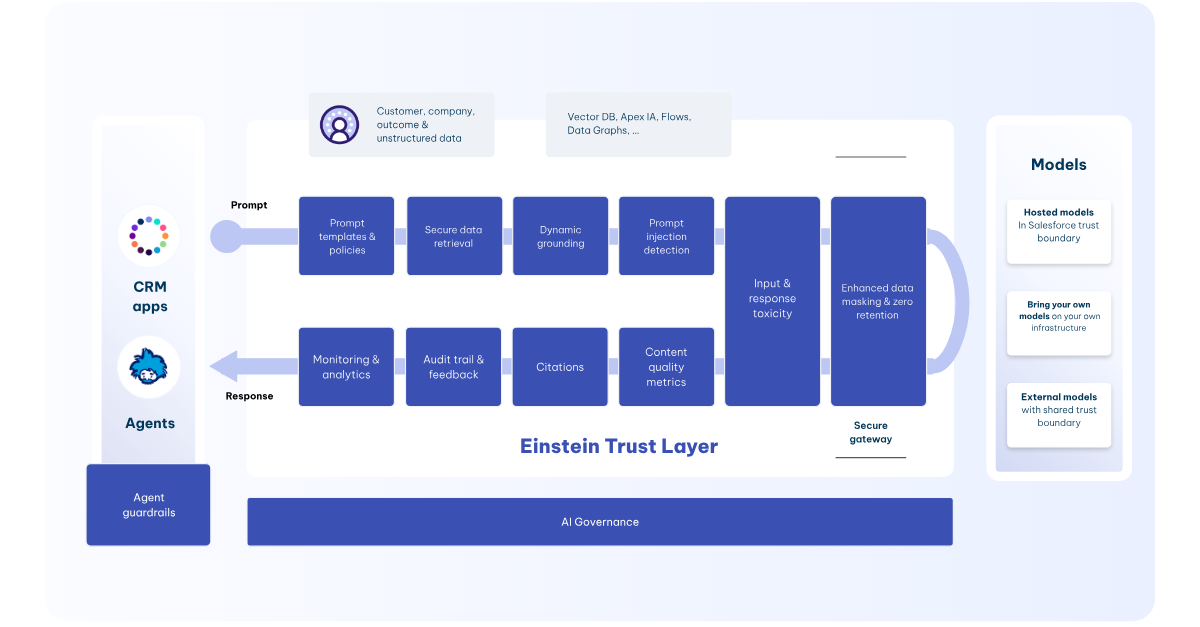

The Einstein Trust Layer works by sitting between Salesforce data and the AI models that generate responses.

Every AI interaction in Salesforce follows a controlled process. Nothing bypasses the Einstein Trust Layer. Here is how that process works step by step:

Step 1 - User Prompt: A user asks Agentforce a question or requests an action.

Step 2- Dynamic Grounding: The Trust Layer checks which data the user is allowed to access and only fetches that data.

Step 3- Data Masking: Sensitive information is identified and masked by the Einstein Trust Layer.

Step 4 - Prompt Defense: Guardrails are added to the prompt.

Step 5 - LLM Processing: The masked, grounded prompt is sent to the LLM for processing.

Step 6 - Toxic Language Detection: The response is scanned for inappropriate content by toxic language detection.

Step 7 - Data Demasking: Masked data is restored for authorized users.

Step 8 - Audit Trail: Every step is logged for compliance and review.

Data privacy and data masking in the Einstein Trust Layer

Data privacy is a core function of the Einstein Trust Layer in Salesforce.

When AI features need business context, Salesforce doesn't send raw customer data directly to AI models. Instead, the Einstein Trust Layer applies data masking automatically.

Data masking means:

- Sensitive fields such as names, email addresses, phone numbers, and IDs are hidden or replaced

- AI models only see the minimum information needed to generate a response

- Personal and confidential data remains protected inside Salesforce

This masking happens in real time. You don't need to configure it manually, which is nice. The Einstein Trust Layer applies consistent privacy rules across all AI interactions.

By masking data before it reaches AI models, Salesforce reduces the risk of data leakage while still allowing AI to be useful. This is critical for industries that handle regulated or sensitive information.

Zero data retention in the Einstein Trust Layer

The Einstein Trust Layer in Salesforce is designed to ensure that customer data is not stored or reused by AI models.

When a prompt is sent to an AI model, Salesforce enforces zero data retention. This means the data used in AI requests and responses is not saved by the AI provider and is not used for future model training.

Zero data retention ensures:

- Salesforce customer data is not stored outside the platform

- Prompts and responses are not reused by third-party AI models

- Business and customer information remains private

This is a key difference between enterprise AI in Salesforce and consumer AI tools. Many public AI tools retain data to improve their models. The Einstein Trust Layer prevents this by design.

By enforcing zero data retention, Salesforce protects intellectual property and sensitive customer information while still enabling generative AI features.

The Einstein Trust Layer allows organisations to adopt AI with confidence, knowing their data is not leaving their control.

Conclusion

The Einstein Trust Layer is the foundation that makes AI usable in Salesforce without compromising trust.

As generative AI becomes part of everyday business workflows, organisations need strong guarantees around data privacy, security, and compliance. The Einstein Trust Layer provides those guarantees by design.